Holy Nonads, Part 000 000 010

The second installment in my Nine-Bit CPU adventure

This is a follow-up to last week’s article, where I talked a lot about why I wanted to design a Nine-Bit CPU, but not a lot about what happened when I did. So here I will go into some details of building my QIXOTE-1 processor, but it will not be a class in how to build a computer. I am far from being an expert hardware designer, and my CPU design is far from being optimal or even practical. So I am not the guy to look to if you want to try it yourself. (But if you are really interested in the theory and architecture of CPU design, see the links below.)

Instead, I would like to talk about what it’s like to design a CPU, so far. The challenges, the ideas good and bad that have come up, and the connections I felt to past engineers attempting to do this same kind of thing.

Even at this early stage, I have received a lot of practical and well-meaning suggestions for this project, about everything from a better name than “Nonad” for the data unit, to architectural advice about word and address lengths that are multiples of nine.

I’ve pretty much ignored most of this advice so far, with respect to the design of QIXOTE-1. Not because they weren’t good ideas. But because this project is more of a thought experiment gone too far than it is an attempt to create anything actually useful.

Practical just doesn’t come onto the radar, here in Nonad land. But far from discouraging people from sending me their ideas, please send me more! I really enjoy reading them and plan to do a roundup of some of the really interesting and sometimes funny things people have pointed me to in our next Memo Rewind for subscribers this spring!

Building a Nine-Bit Toaster

A guy named Thomas Thwaites did a fascinating TED Talk a few years back, where he outlined his quest to build a toaster from scratch. His attempt to create a seemingly simple device like a toaster without the aid of even basic building blocks like plastic, wire, or steel was a brilliant commentary on how reliant we all are on things like the global supply chain, and civilization in general.

Building a new CPU myself felt a bit like that, although I admit that I did not take my DIY quest down to as low a level of abstraction as Thwaites. Others have built CPUs out of lower-level and less obvious building blocks than an FPGA, such as this Tinkertoy computer from 1975, or Minecraft blocks.

That kind of low-level hackery does in fact also appeal to me, of course. But I really wanted to work on the software side of things as well in this project, and the thought of building a working, program-running CPU without the benefit of even having logic gates at my disposal seemed pretty daunting.

If I ever went there though, I would likely take up the suggestion of a reader of the previous article, who proposed building a system using trinary logic. In addition to being a strange choice that avoids even the basic standard of binary data, a three-state system would fit well thematically into the world of a nine-bit computer.

Fail Your Way To Success

Maybe QIXOTE-2. The killer reason to stick with my FPGA plan for the hardware, though, turned out to be simulation.

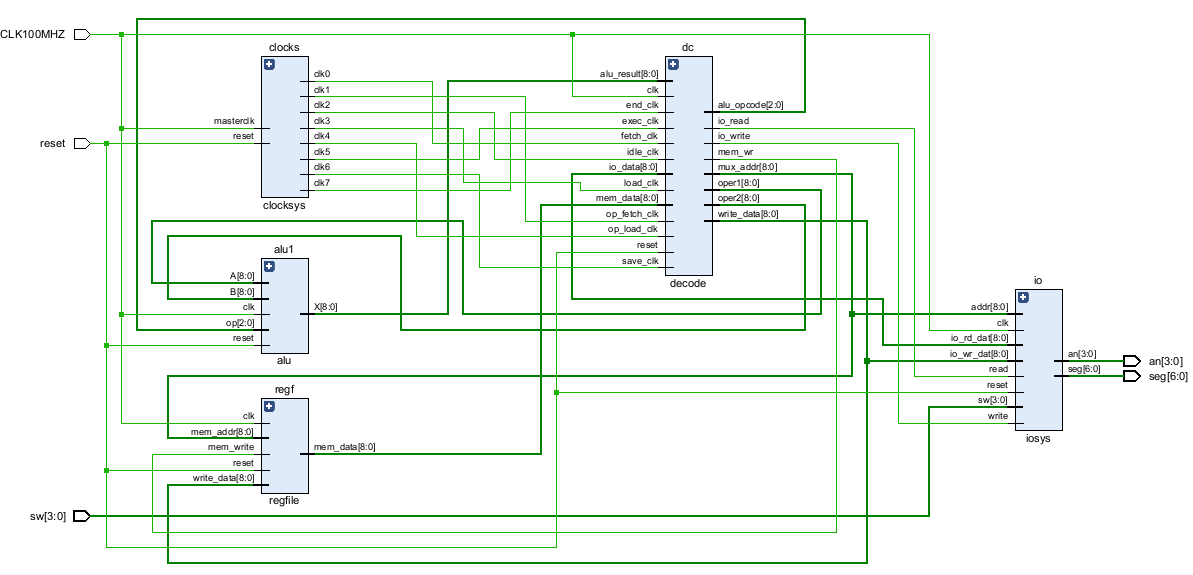

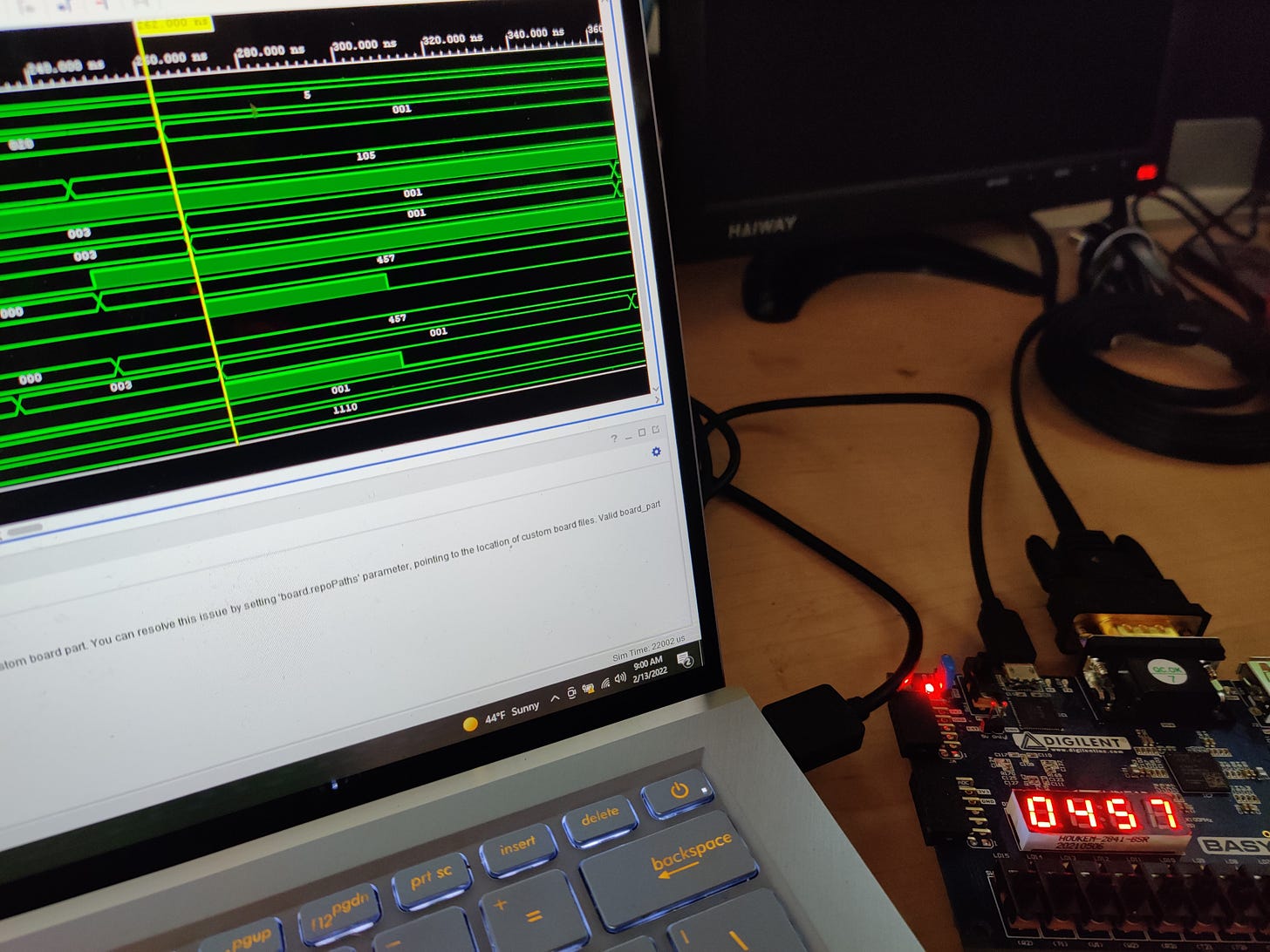

I began to cobble together things I was sure I needed, like an instruction decoder, an arithmetic/logic unit (ALU), registers, memory, and I/O. But getting them to actually work together required hundreds of trial-and-error modifications on my part, and that could be done relatively quickly via behavioral simulation.

It would also have been possible to run each circuit attempt on the actual FPGA hardware, but the cycle of synthesizing, routing, creating a bitstream, and downloading to the FPGA board took about five minutes each time, which is considerably longer than the modify/run/debug cycle when simulating a circuit. And of course, simulation gives you a full view of what is actually going on, versus the mysteries of not having any LEDs light up due to a bad hardware implementation.

Our computer-designing predecessors would have killed for a five-minute turnaround time between hardware modification and the testing of it. I thought about what must have transpired during the building of early computers, back in the middle part of the 20th Century. New hardware then did in fact require a lot of (time-consuming) soldering by hand, so they built generic pieces ahead of time, like single-bit registers, logic modules for various functions, decoders, and so on, and then patched these all together, endlessly moving wires around as they debugged.

So there actually could have been situations where these old relay and tube computers could be reconfigured in five minutes or less. But not if you wanted to make some fundamental change, like the size of a data bus or the overall timing of the system.

If I had coded it to be configurable (which I did not), the QIXOTE-1 could morph from a 9-bit computer to say a 16-bit one with just a change to a few lines of Verilog parameter code. Then you could recompile, and run the new, bigger machine just a few minutes later.

But I wasn't really interested in enabling that kind of configurability with my project. Improvements in design productivity like this, though, did let me sidestep most of the costs of dumb design mistakes and poor architectural choices that my predecessors would have incurred. Including what I assume were mistakes ending in smoke and fire.

There was nothing really to stop me from just trying many things, even dumb things, one by one. So I just kept building bad CPUs until I built a good one.

Cheap, programmable hardware and the ability to simulate systems before building them open a whole new world of possibilities for someone interested in experimenting, as the cost of failure is low.

Early computer designers meticulously planned out their designs mathematically, pretty much out of necessity. They built number-crunching machines, meant for high-priority wartime tasks like code-breaking and artillery calculations. They really could not afford to build things by experimenting and making mistakes along the way, and the idea of creating a computer for entertainment, or even artistic purposes, was far off.

Obviously, we did get to the point where computers were more than just mathematical solving machines, but many of the original design decisions that were made for the earliest computers still survive to this day. Things like Von Neumann and Harvard architectures, SISD program execution, and the organization of things into specialized hardware like instruction decoders and ALUs.

There are very good reasons why these things have remained part of mainstream computer design for so long. But it is also a very good idea for people to revisit these decisions once in a while, and see if something else can also be created given the new technology and associated freedoms we now enjoy. My advice to anyone interested in experimenting with hardware is to take advantage of all the progress that has been made in hardware development methods, and go build something different!

A Simple But (Surprisingly) Normal Machine

I do like to think about weird, non-mainstream computer designs, like say a less-synchronous kind of system that operates on dataflow principles. Or non-deterministic hardware that operates on probabilities. Machines with multi-dimensional, or maybe even fractal program counters that can be at multiple locations, or maybe stack levels, all at the same time. Fuzzy logic. Trinary or analog systems. Self-modifying code machines. Neural processors.

Many have dabbled in and even built computers with strange architectures like these. (Neural nets and machine learning systems are a notable growth area today.) A big issue with weird computer architectures, though, is that it can be hard to create a straightforward way to program and debug them.

Which is a big reason that in the end, I decided not to make any radical departures from Von Neumann / SISD architecture for QIXOTE-1. At least immediately.

But also, because I felt a need to actually understand the rules of creating a processor before I decided to break any of them, maybe.

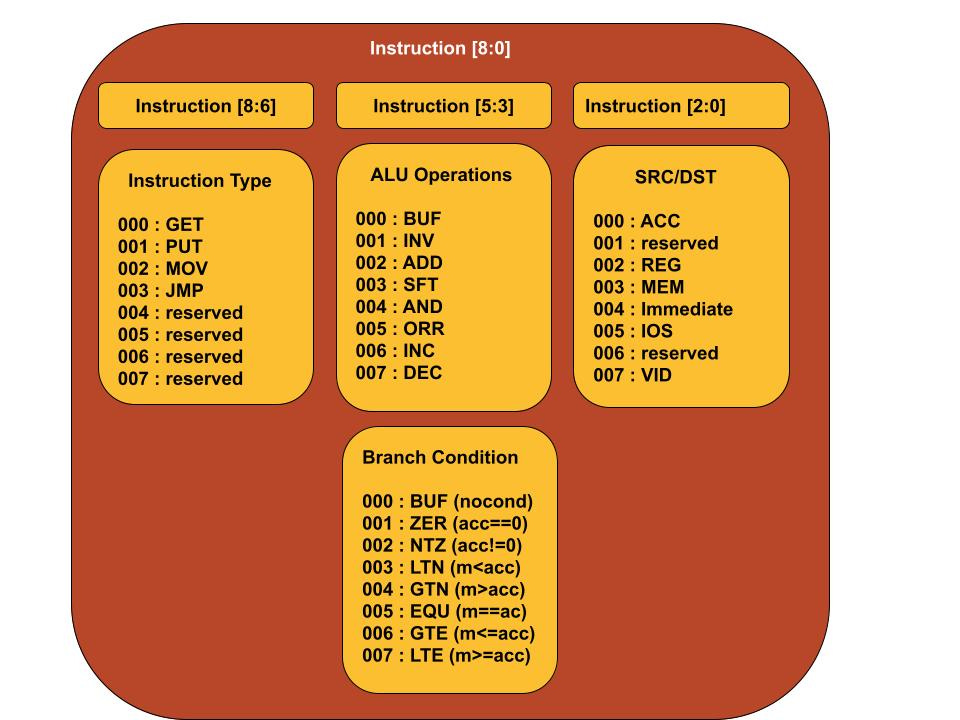

So once you get past the nine-bit design, the implementation of QIXOTE-1 turned out to be rather conventional. It’s partly inspired by the PDP-8, a 12-bit octal machine that is famously considered a very early RISC machine. The first 3 bits of the instruction word on the PDP-8 are the instruction opcode, so it is often said the machine only has 8 instructions. (That is not quite true though, because instruction 7 breaks out into a myriad of microinstructions.)

QIXOTE-1 also decodes the first 3 instruction bits as opcode. It currently has just four instructions: Get data, Put data, Move data, and Jump. This is also a bit of a lie, because each of these can perform its operation either with an inlined ALU operation (in the case of GET and MOV), or subject to some condition (in the case of PUT or JMP).
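As a rough illustration of the opcode split described above, here is a minimal Python sketch that pulls a 3-bit opcode out of a nine-bit instruction word. It assumes "first 3 bits" means the three most significant bits, and the opcode numbering (GET = 0 and so on) and the `decode` helper are invented for the example; the actual QIXOTE-1 encoding is not given in this article.

```python
# Hypothetical opcode numbering, for illustration only.
MNEMONICS = {0: "GET", 1: "PUT", 2: "MOV", 3: "JMP"}

def decode(word):
    """Split a nine-bit instruction word into (opcode, remaining six bits)."""
    if not 0 <= word <= 0o777:
        raise ValueError("not a nine-bit value")
    opcode = (word >> 6) & 0o7  # top three bits, i.e. the first octal digit
    rest = word & 0o77          # lower six bits, i.e. the last two octal digits
    return opcode, rest

opcode, rest = decode(0o325)
print(MNEMONICS.get(opcode, "???"), oct(rest))
```

One nice side effect of a nine-bit word is that, written in octal, the opcode is simply the first of three digits.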

Also, the location that things are GET-from or PUT-to is quite flexible and can be memory, a register, an immediate operand, the accumulator, or a mapped I/O device. So really a lot of instruction combinations are possible. (I’d say, about 192 or so today)

Every instruction except Move is also followed by a nine-bit operand that can be a literal/immediate value or memory, I/O, or register address. If you wanted to get picky, instructions are either 9 or 18 bits, actually.

But it is nonetheless a tiny, bounded machine. Quite simple, with no cache system, pipelining, prefetching, and so on. I have also not implemented extended memory addressing, so the address bus is only 9 bits. I did get an excellent suggestion to make it a three-Nonad, 27-bit bus, fitting nicely into a general “three of three” theme I have going on. But that is another QIXOTE-2 candidate idea, I think.

This constrains things quite a bit. I intend to explore what I can do in the 512 Nonads of memory afforded to me by the limited 9-bit address space before hatching any more ambitious schemes, though. (The source/dest field of the instruction, however, allows for slightly more memory to be addressed, in the form of non-contiguous memory pages. I intend to implement a simple video memory space this way.)
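For anyone who wants to check the arithmetic, here is a small Python sketch of how address-bus width translates into addressable Nonads. The constant and function names are made up for the example.

```python
NONAD_BITS = 9  # width of one Nonad

def addressable_nonads(address_width_in_nonads):
    """Number of distinct addresses on a bus that many Nonads wide."""
    return 2 ** (NONAD_BITS * address_width_in_nonads)

print(addressable_nonads(1))           # 512, the current 9-bit bus
print(oct(addressable_nonads(1) - 1))  # 0o777, the highest address
print(addressable_nonads(3))           # 134217728, the suggested 27-bit bus
```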

A One-Machine Program

There were a lot of bumps on the road from thinking about building a processor to actually getting one to run on real hardware. I hope to go into a few of the interesting and sometimes amusing ones in the next part of this series. But there did eventually come a day when I had something that was both functional and synthesizable (these were mutually exclusive, it seemed, for a good while).

I loaded a version of QIXOTE-1 along with the test program above onto my BASYS 3 FPGA board. It is a simple program that goes out to a memory location, gets a Nonad (octal 456), increments it, writes the result to the LED display (mapped to I/O address 001), then loops in place forever. (Maybe I need a HALT instruction?)

When I loaded it and hit reset to start the CPU back at address 000, I was treated to a bright-red (and importantly, correct!) octal “457”, as shown in the earlier picture.
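To make the behavior of that test program concrete, here is a minimal Python model of what it does. This is not QIXOTE-1 machine code or a simulation of the actual design: the data address `0o100`, the function name, and the wrap-around at nine bits are all assumptions made for the sketch. Only the value 456 and the LED display's I/O address 001 come from the description above.

```python
NONAD_MASK = 0o777  # nine bits

DATA_ADDR = 0o100   # hypothetical memory location holding the test value
LED_PORT = 0o001    # I/O address of the LED display

def run_test_program(memory, io):
    """Model one pass of the test program: get, increment, put."""
    acc = memory[DATA_ADDR]       # get the Nonad from memory
    acc = (acc + 1) & NONAD_MASK  # increment (wrapping at nine bits is assumed)
    io[LED_PORT] = acc            # write the result to the LED display
    # The real program now loops in place forever; the model just returns.
    return io

io = run_test_program({DATA_ADDR: 0o456}, {})
print(format(io[LED_PORT], "03o"))  # 457
```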

I really can’t describe how something this meager could feel so satisfying. It is very much the “Hello World” phenomenon I discussed in an article earlier this year. Just the joy of bringing something new into the world I guess.

What particularly struck me was the thought that while I had written many programs in the past that only I had run, this was likely the first time I had written a program that no one else could run.

Running it anywhere else would be impossible, because (at the moment at least) there is exactly one machine on Earth capable of running it. I’ve told old-man stories before about how in the ’70s I was the only one I knew with a computer in his house. It felt special, and I’d be lying if I said there wasn’t at least some of that special feeling coming back to me, working with this one-of-a-kind machine.

But I am not really interested in the having of an Only-Thing, just in the making of one. When the code for this project is in less embarrassingly-bad shape, I’ll put it on GitHub or somewhere central, in case someone gets really bored and wants to build their own Nonadic machine based on mine.

So who knows, maybe even QIXOTE-1 will not be unique forever. In the end, nothing is guaranteed to be one-of-a-kind, but you.

Explore Further

Next Time: So you want to design a simple CPU. What could go wrong? Plenty. I run down some of the weird and sometimes wonderful ways things can go off the rails on the journey from processor concept to processor reality. More hardware design adventures, good and bad, in part three of my Nine-Bit CPU saga, Holy Nonads!

Stay in touch with nerdy computer adventures, Nonadic or otherwise, by subscribing to the Mad Ned Memo! Each issue features tales and discussions from the world of computer engineering and technology — past, present, and future. Follow the link to see more, and if it’s your kind of thing, consider subscribing! It is cost-free and ad-free, and you can unsubscribe at any time.
