Holy Nonads! Yet Another Nine-Bit Article!

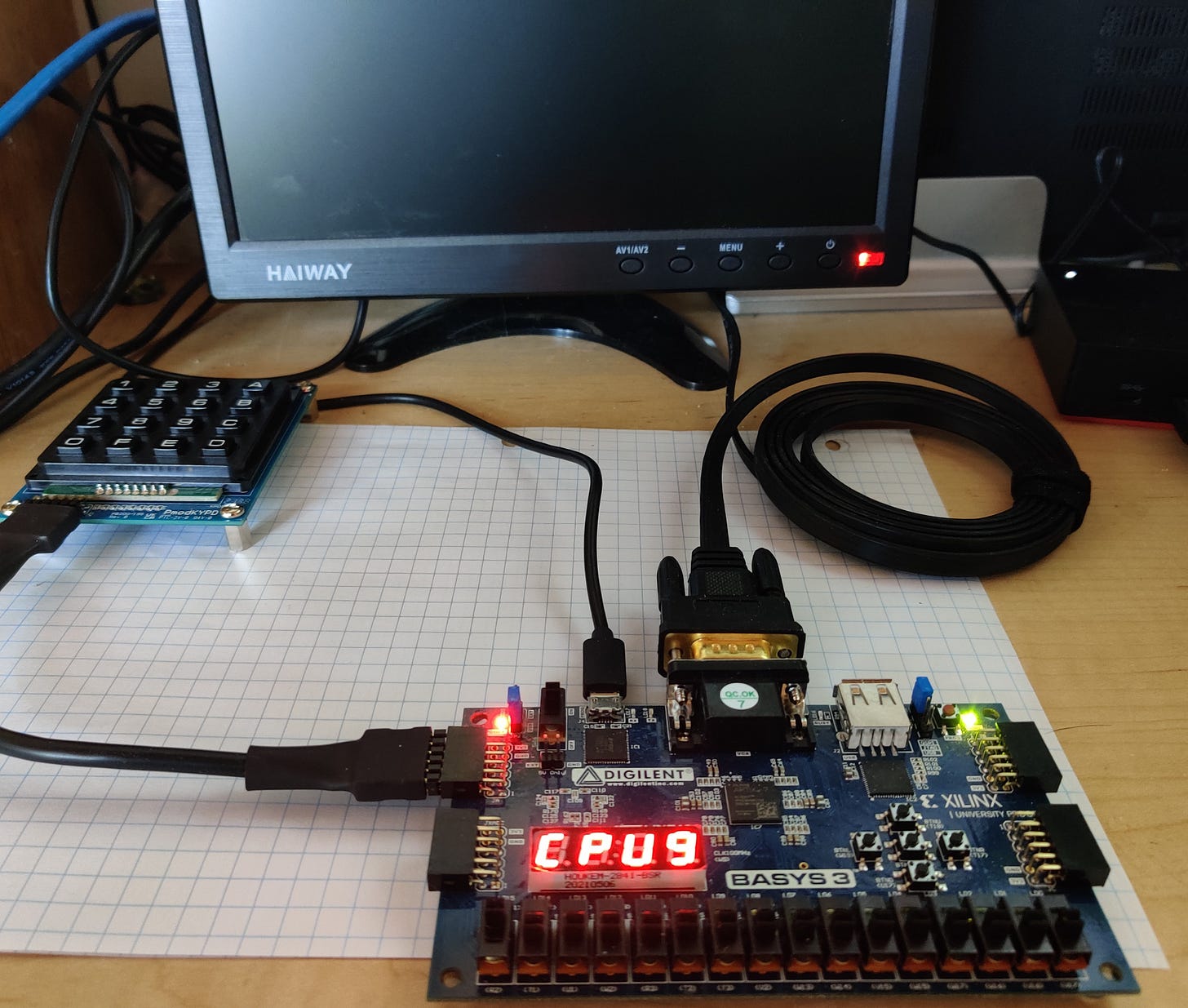

Part 3 of My Quixotic Quest to Build a 9-Bit CPU

Hello and welcome to part three of my “Holy Nonads” article series, which chronicles my attempt to build a nine-bit computer. So far I have talked about why I wanted to build this odd machine, and a little about the design of the hardware architecture.

I have not figured out yet how many parts there should actually be in this series, but I will confess that thematically, nine does appeal to me. Before you go running for the unsubscribe button though, I do plan to relent after this article and move on to other, non-Nonadic topics for a while. I was thinking maybe it should follow the three-of-threes theme: a trilogy of trilogies, with some resting time in-between. You know, like Star Wars, except in linear order, and without Jar-Jar Binks.

So if you tire of the whole nine-bit saga, hang in there. But for whatever number of people out there who are actually into building weird CPUs, I do have some advice to offer, next.

Timing Is Of The Essence

I spend a fair amount of my work life dealing with hardware description languages like Verilog, which I suppose gives me a little bit of a leg up with trying to create FPGA designs. But not as much as you might think. In reality, I do not write much Verilog code, and I certainly have never attempted to do anything as complex as a CPU.

So I found out early about all the things I had under-appreciated about building a CPU.

Like timing.

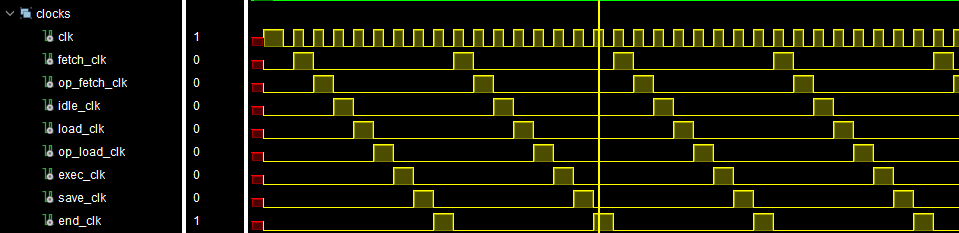

I was of course aware that things do take time to happen in silicon; inevitable delays to propagate signals through wires and to process them through active components like the transistors that make up logic gates and so on. Also, I understood the idea that running a CPU instruction is broken down into steps, like fetching the instruction, decoding it, executing it, and saving any result somewhere.

I naively thought that I should be able to do the above steps in four clock cycles, for all of my instructions. It seemed like plenty, given modern processors could probably do it in fewer, thanks to a lot of sophisticated design enhancements that pipeline the operations so they happen in parallel as much as possible.

The more clock cycles it takes to run an instruction, the slower your machine is. Since I was not particularly worried about speed though, I didn’t mind having multiple clock phases per instruction. They made my timing life simpler, and meant I did not have to think about implementing pipelines and branch prediction and what have you. Four seemed like enough, but I kind of forgot about this little thing called memory latency.

Kind of embarrassing actually, because I spent the first five years of my career designing memory systems for mainframe computers at Digital Equipment Corp, and there were lots of latency issues to contend with.

Give Me a Second to Recall

This was the mid-1980s, the peak years for the minicomputer companies, and DEC was a giant whale, sucking in new college hires like krill. We were all given responsibilities far above what we probably should have been doing as junior employees. In my case, I was asked to design a chip that handled interleaving, refresh, and self-test of main memory. My chip talked to other chips in the cache system, which talked in turn to instruction and data units in the CPU.

The other new hires and I did what I would say is a pretty good job getting working designs together, given none of us had ever designed a chip before. But our inexperience soon became apparent when the architects of this project began to calculate machine performance, and realized there was something like a 24-cycle latency for data access from the CPU to main memory. With predictable performance consequences.

Each of us in the path from memory to CPU had made decisions about clocking and control in our chips that made things simple, but caused there to be way too many stages of logic. When our management found out about it, they freaked out and put our system architect Bob Stewart in charge of reducing memory access time, and I think we eventually got it down to something like 8 cycles. This is where we learned all sorts of neat tricks they didn’t cover in Digital Logic 101, like one-hot state machines, and flow-through latch design.

I was thinking about this as I worked on getting my CPU to actually fetch stuff from memory. I thought about how DEC really should have had their management act together a little better and supervised the junior people earlier on what they were actually doing. But I also thought about how I had basically forgotten about memory latency in general, in spite of my many trials with it.

These mainframe CPU designs (I then remembered) were full of control signals to do things like gate clocks and stall execution until data was ready, which was a design idea I had not planned for in the least. I considered modifying my CPU to implement a stall condition (and may still at some point). But in the end, I opted instead to expand my clock phase system to 8, so I could keep things simple and have a guaranteed overall instruction period without stalls, gating, and so on.

Even that turned out to be a challenge. The Artix-7 FPGA chip I was using had built-in BRAM structures that could deliver memory data in two clock cycles. That’s incredibly fast main memory access, by any CPU clock standard. I had the luxury of not needing a lot of memory in general for QIXOTE-1, so the on-chip memory could be my main memory (which also avoids the need to build a cache of any sort).

But even two cycles of delay can add up, when you have to do things like fetch an instruction from memory, possibly operands as well, do operations on them that may also involve memory access, then store them possibly back to memory again. Again, the right thing to do would be to build a system that waited for memory access to complete, and have a variable number of clock cycles per instruction.
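To make the "two cycles add up" point concrete, here is a rough back-of-the-envelope sketch in Python. The step list below (fetch, operand read, execute, write back) and the one-cycle cost for non-memory steps are assumptions made purely for illustration; they are not the actual QIXOTE-1 instruction sequencing.

```python
# Hypothetical instruction steps: (name, touches_memory).
# This breakdown is an illustrative assumption, not QIXOTE-1's real sequence.
STEPS = [
    ("fetch instruction", True),
    ("fetch operand", True),
    ("execute", False),
    ("write result", True),
]

def cycles_per_instruction(memory_latency, steps=STEPS):
    """Total cycles if each memory step costs `memory_latency` cycles and
    every other step costs one cycle (no pipelining, no overlap)."""
    return sum(memory_latency if uses_mem else 1 for _, uses_mem in steps)

def fits(phases, memory_latency, steps=STEPS):
    """True if the instruction fits in a fixed number of clock phases."""
    return cycles_per_instruction(memory_latency, steps) <= phases

if __name__ == "__main__":
    for latency in (1, 2):
        total = cycles_per_instruction(latency)
        print(f"latency={latency}: {total} cycles, "
              f"fits in 4 phases: {fits(4, latency)}, "
              f"fits in 8 phases: {fits(8, latency)}")
```

Under these assumptions, one-cycle memory fits the original four-phase plan exactly, while two-cycle memory needs seven cycles. Add one more memory access to the hypothetical instruction and it needs nine, which no longer fits in eight phases either.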

But I didn’t go there. Instead, I reverted to inferred memory structures composed of registers, which are the fastest form of memory you can get, delivering read data in just one cycle. But it is an expensive form of memory, and more than a few K of it would exhaust the resources of the Artix-7 chip. For now, that is fine, because the 512 Nonads that are addressable in the system fit fine in registers.
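As a quick sizing check, the arithmetic behind "fits fine in registers" can be sketched like this. It assumes one flip-flop per stored bit and ignores the read multiplexing and address decode logic that a register-based memory also needs; the helper name is made up for the example.

```python
WORD_BITS = 9            # one nonad
ADDRESSABLE_WORDS = 512  # from the text above

def flip_flops_needed(words, word_bits=WORD_BITS):
    """Storage bits for a register-based memory, assuming one flip-flop
    per bit (mux and decode logic not counted)."""
    return words * word_bits

if __name__ == "__main__":
    print(flip_flops_needed(ADDRESSABLE_WORDS))      # 4608
    print(flip_flops_needed(4 * ADDRESSABLE_WORDS))  # 18432
```

The cost grows linearly with the word count, so every doubling of memory doubles the flip-flops consumed. Whether a given size still fits depends on the specific Artix-7 part, which is not stated here.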

But as my software ambitions grow, the whole memory addressing/dealing with latency thing will eventually have to be dealt with.

Why all the reluctance to fix it? A big reason is that I am a one-man show, and I want to have some episodes of this show be about things other than the CPU hardware: like creating assemblers and languages, working with video, and so on. If I am spending all my time figuring out the CPU timing, I never get to do those. So I am more than willing to trade CPU speed for a simpler design.

The other reason though is probably trauma. The whole area of memory latency and signal feedback like clock gates and stalls touches on an area known as Control Theory. This is a dark chapter of my college education that involves the worst professor there ever was (anyone claiming they had a worse one can fight me).

Fourier, Frogs, and an F on the Final

Control Theory is the study of complex systems that have feedback and latency in them, using mathematical principles (Fourier series, in many cases). Feedback systems are a notoriously difficult thing to model, but a lack of understanding of the principles involved can lead to disastrous results, like bridge collapses or rocket explosions. So as an Engineer, you ignore them at your own (and maybe other people’s) peril. As such, I harbor no beef against the Control Theory area of study itself, as long as it is not me doing it.

My experiences with it in college were disasters in their own right though. ECE students at my school were required to take a course called Signals and Systems, a hybrid Control Theory and Digital Signal Processing course that was taught by someone from the Biomedical Engineering department. This Professor X’s publish-or-perish project was all about modeling the renal output of frogs using Control Theory, and he had tailored his hapless grad students’ research projects around this material.

And it wasn’t just the grad students that got to be part of the frog-piss fun. With just two weeks left in the course, our professor had become increasingly irritated that the majority of the (mostly ECE, not Biomed) people in his class had scored 40 points or less on his exam. Out of 200 possible. So he declared that the course was now a lab course, and we all needed to complete a lab experiment in his bio/frog lab for 30% of our grade, something involving electrically probing Petri dishes full of different solutions.

Since there were only two weeks left in the semester, there were virtually no slots available for all his students to use the lab and equipment, and the grad students of course had priority. So I found myself in his lab on a Saturday morning at 8 AM, grumpily probing dishes of god-knows-what, while Biomed grad students nearby took frogs out of buckets and chopped their heads off with a little mini-guillotine.

I flunked this course, in spite of finishing the lab. The frog-piss thing should have tipped me off; this professor was a well-known bad egg and my friends all wisely changed their status to “Audit” on this course one week in, but I stuck it out like an idiot. We all had to retake it, again, in the summer, with a different professor. So I had then and continue now to have a fairly negative impression of the whole Signals and Systems area.

But, you know, it is relevant even so. Lack of proper system modeling of things like latency and feedback loops can doom more than bridges and rockets. It caused problems on the big mainframe project I worked on all those years ago, and continues to cause problems on the little CPU project of mine today. I think I was prematurely dismissive of this course because of the mainly linear/analog applications of the math, and of course the horrible teaching of it in general.

I guess if I were to give a curriculum suggestion it would be for ECE students to take some variant of this course that makes the general principles more accessible to the designers of general electronics and digital systems. But since I am a self-described math-hater, you probably shouldn’t take academic advice from me.

The pragmatic advice I feel more qualified to give is, if you plan to design your own weird CPU, be sure to think up-front not only about what things are connected to what, but also about how long it takes for information to get from place to place, and what happens at the system level as a result.

The System Eats Itself

I had some other less-consequential timing problems after resolving the memory latency issue, but eventually the CPU began to run test programs. This is where things got interesting, because I started encountering situations where I was dealing with two things of unknown quality: my hardware, and my software. And it was sometimes tough to tell where the problem was. On more than one occasion I cast unwarranted aspersions on the quality of my CPU hardware, only to discover a programming error on my part in the assembler test program.

The shift from hardware testing via simulation to testing via software actually running on the hardware has been fascinating for me to observe. It has problems of its own, but the software aspect opens up a whole new world to explore on this project. The next trilogy of this series (after the previously-mentioned breather) will move out of the hardware-centric focus of the series so far, and delve into some of the design issues and challenges of creating software for a brand new machine.

I’ll wrap up for today though by mentioning one problem where simulation debug was really essential to figuring out what the software was doing. Early QIXOTE programs seemed to do just fine with reading memory, and sometimes writing. But sometimes not. I couldn’t figure out what was going wrong. But then when I ran a simple program and inspected memory contents in the simulator, I found out why: QIXOTE-1 was modifying its own program!

It turns out the write signal to memory was level-sensitive and not edge-sensitive. For the non-hardware people here, that just means that instead of writing memory contents at one precise moment in time, it was writing over a span of time, while the address and data were still in flux. So random areas of memory were getting overwritten, including the executing program code itself.
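For anyone who wants to see the difference rather than take it on faith, here is a toy Python model of the two behaviors. It is only a sketch: the signal trace, the addresses the bus wanders through, and the function names are all invented for the example, and real hardware would be described in an HDL rather than replayed from a list of samples.

```python
def run(trace, level_sensitive, size=16):
    """Replay a list of (clk, we, addr, data) samples against a small memory.

    level_sensitive=True  -> write whenever `we` is high.
    level_sensitive=False -> write only on a rising edge of `clk` while `we` is high.
    """
    mem = [0] * size
    prev_clk = 0
    for clk, we, addr, data in trace:
        rising = clk == 1 and prev_clk == 0
        if we and (level_sensitive or rising):
            mem[addr] = data
        prev_clk = clk
    return mem

# Hypothetical trace: the address bus passes through 3 and 7 before settling on 5.
TRACE = [
    (0, 1, 3, 42),  # write enable already high, address still in flux
    (0, 1, 7, 42),
    (0, 1, 5, 42),  # address has settled
    (1, 1, 5, 42),  # rising clock edge
    (1, 0, 5, 42),  # write enable drops
]

def written(mem):
    """Addresses whose contents changed from the initial zero."""
    return [addr for addr, value in enumerate(mem) if value != 0]

if __name__ == "__main__":
    print(written(run(TRACE, level_sensitive=True)))   # [3, 5, 7]
    print(written(run(TRACE, level_sensitive=False)))  # [5]
```

The level-sensitive version scribbles on every address the bus visits while write enable is high. The edge-sensitive version touches only the settled address. If one of those stray addresses holds program code, the program changes underneath itself.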

Leading to predictably unpredictable results. This is not actually that unique a scenario; I think many have encountered similar situations with corrupted or self-modifying programs in their coding lives.

It was a simple fix, and memory reading and writing seems to be working fine now. But I will say I felt a little proud of QIXOTE-1, trying to break free from the confines of its programming and spread Nonadic glory through the universe!

Explore Further

Core War - (A 1984 game of battling memory-modifying programs)

Next Time: Something for the technical managers out there. For those who have the unfortunate duty of having to let someone go, it can be a stressful situation for everyone. It is an outcome that in most cases should be avoided, but it does of course happen. And there are even benefits, for all involved. Sympathies and silver linings for the Fire-ee and Fire-er coming up in: The Sunny Side of Firing Someone
